
A collection of MATLAB routines for acoustical array processing on spherical harmonic signals, commonly captured with a spherical microphone array.

Archontis Politis, 2014

Department of Signal Processing and Acoustics, Aalto University, Finland

This library was developed during my doctoral research in the [Communication Acoustics Research Group](http://spa.aalto.fi/en/research/research_groups/communication_acoustics/), Aalto, Finland. If you would like to reference the code, you can refer to my dissertation published here:

Description

This is a collection of MATLAB routines that perform array processing techniques on spatially transformed signals, commonly captured with a spherical microphone array. The routines fall into six main categories:

obtain spherical harmonic (SH) signals with characteristics that are as broadband as possible,

generate beamforming weights in the spherical harmonic domain (SHD) for common signal-independent beampatterns,

demonstrate some adaptive beamforming methods in the SHD,

demonstrate some direction-of-arrival (DoA) estimation methods in the SHD,

demonstrate methods for analysis of diffuseness in the sound field,


demonstrate flexible diffuse-field coherence modeling of arrays

The latest version of the library can be found at

Detailed demonstration of the routines is given in TEST_SCRIPTS.m and at

The library relies on two other libraries by the author related to acoustical array processing, found at:

They need to be added to the MATLAB path for most functions to work.

For any questions, comments, corrections, or general feedback, please contact archontis.politis@aalto.fi

Table of Contents

Microphone Signals to Spherical Harmonic signals [refs 1-4]

The first operation is to obtain the SH signals from the microphone signals. This involves two steps: a matrixing of the signals that performs a discrete spherical harmonic transform (SHT) on the sound pressure over the spherical array, followed by an equalization of the SH signals that extrapolates them from the finite array radius to array-independent sound-field coefficients. This operation is limited by physical considerations, and the inversion should be regularized to avoid excessive noise amplification in the SH signals. A few approaches are included:

- Theoretical-array-based filters

- Measurement-based filters

The measurement-based approach is demonstrated using directional responses of an Eigenmike array [ref2], measured in the anechoic chamber of the Acoustics Laboratory, Aalto University, Finland. The measurements were conducted by the author and Lauri Mela in summer 2013.
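The two steps (SHT matrixing, then regularized equalization) can be sketched for the simplest possible array. This is a minimal Python/NumPy illustration, not the library's MATLAB code. It assumes four omnidirectional sensors on a regular tetrahedron over an open (transparent) sphere, real orthonormal SH in ACN order, a sound field truncated to first order (so spatial aliasing is ignored), and Tikhonov regularization as the noise-limiting step; the helper names `sh1`, `open_sphere_modal` and `regularized_inverse` are hypothetical.

```python
import numpy as np

ORDER = np.array([0, 1, 1, 1])  # SH order n of each ACN channel


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere.
    U: unit vector(s) of shape (3,) or (Q, 3); returns a (Q, 4) matrix."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def open_sphere_modal(kr):
    """Modal coefficients b_n(kr) = 4*pi * i^n * j_n(kr), n = 0, 1, for omni sensors on an open sphere."""
    j0 = np.sin(kr) / kr
    j1 = np.sin(kr) / kr**2 - np.cos(kr) / kr
    return np.array([4 * np.pi * j0, 4j * np.pi * j1])


def regularized_inverse(b, lam):
    """Tikhonov-limited inverse conj(b) / (|b|^2 + lam); lam = 0 gives the plain 1 / b,
    lam > 0 bounds the gain magnitude by 1 / (2 * sqrt(lam))."""
    return np.conj(b) / (np.abs(b) ** 2 + lam)


# Four sensors on a regular tetrahedron (a spherical 2-design, so the first-order SHT is exact)
U_mics = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]) / np.sqrt(3)
Y_mics = sh1(U_mics)                     # (4 sensors, 4 SH channels)
y0 = sh1([0.0, 0.0, 1.0])[0]             # hypothetical plane wave from +z

kr = 0.5
b = open_sphere_modal(kr)[ORDER]         # modal coefficient for every ACN channel
p = Y_mics @ (b * y0)                    # sensor pressures of a field truncated to first order

a_hat = np.linalg.pinv(Y_mics) @ p                 # step 1: discrete SHT (matrixing)
a_eq = regularized_inverse(b, 0.0) * a_hat         # step 2: plain equalization
a_reg = regularized_inverse(b, 0.1) * a_hat        # step 2: noise-limited equalization
```

With `lam = 0` the equalization recovers the plane-wave SH coefficients `y0` exactly, while `lam > 0` shrinks each order by `|b|^2 / (|b|^2 + lam)` and caps the gain, trading low-frequency accuracy for bounded noise amplification. The library's own limiting filters, such as soft-limiting [ref3], may work differently.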

Signal-independent Beamforming in the Spherical Harmonic Domain [refs 5-10]

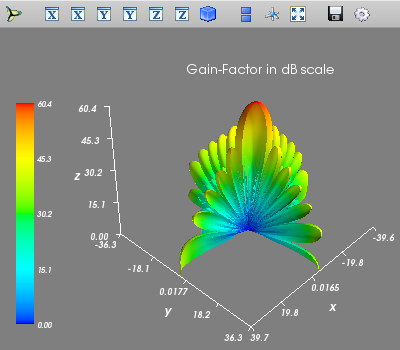

After the SH signals have been obtained, it is possible to perform beamforming in the SHD. In the frequency band where the SH signals are close to the ideal ones, beamforming is frequency-independent and corresponds to a weight-and-sum operation on the SH signals. The beamforming weights can be derived analytically for various common beampatterns and for the available order of the SH signals. Beampatterns keep the same shape when steered to any direction in the SHD, and if they are axisymmetric, rotating them to an arbitrary direction becomes very simple.
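The simple rotation of axisymmetric beams can be checked numerically. By the addition theorem, weights $w_{nm} = c_n Y_{nm}(\mathbf{u}_0)$ give the pattern $\sum_n c_n \frac{2n+1}{4\pi} P_n(\cos\gamma)$, which depends only on the angle $\gamma$ from the look direction $\mathbf{u}_0$, so steering only requires evaluating the SHs at the new look direction. The Python/NumPy sketch below is not the library's API; it assumes real orthonormal first-order SH in ACN order, and `sh1`, `steer_axisymmetric` and `beam_output` are hypothetical helpers.

```python
import numpy as np

ORDER = np.array([0, 1, 1, 1])  # SH order n of each ACN channel


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def steer_axisymmetric(c, u0):
    """Weights w_nm = c_n * Y_nm(u0) for an axisymmetric pattern with per-order coefficients c = [c_0, c_1]."""
    return np.asarray(c, dtype=float)[ORDER] * sh1(u0)[0]


def beam_output(w, a):
    """Weight-and-sum of SH signals a (shape (..., 4)) with weights w (shape (4,))."""
    return a @ w


# First-order cardioid (1 + cos(gamma)) / 2. Since the pattern is
# sum_n c_n (2n+1)/(4 pi) P_n(cos gamma), this needs c_0 = 2*pi and c_1 = 2*pi/3.
c_card = [2 * np.pi, 2 * np.pi / 3]
u0 = np.array([1.0, 1.0, 0.0]) / np.sqrt(2)    # hypothetical look direction
w = steer_axisymmetric(c_card, u0)

# Unit-amplitude plane waves from random directions have SH signals a = Y(u)
rng = np.random.default_rng(0)
U = rng.normal(size=(1000, 3))
U /= np.linalg.norm(U, axis=1, keepdims=True)
response = beam_output(w, sh1(U))
expected = (1 + U @ u0) / 2                    # cardioid around u0, no rotation formulas needed
```

Only the look direction enters the weights, so the same `c_card` steers the cardioid anywhere without rotation matrices.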

The following axisymmetric patterns are included in the library:

- cardioid [single null opposite the look direction]

- supercardioid (up to 4th-order) [ref5][maximum front-to-back rejection ratio]

- hypercardioid/superdirective/regular/plane-wave decomposition beamformer [maximum directivity factor]

- max-energy vector (almost super-cardioid) [ref6][maximum intensity vector under isotropic diffuse conditions]

- Dolph-Chebyshev [ref7][sidelobe level control]

- arbitrary patterns of differential form [ref8][weighted cosine power series]

- patterns corresponding to real- and symmetrically-weighted linear array [ref9]


and some non-axisymmetric cases:

- closest beamformer to a given directional function [best least-squares approximation]

- acoustic velocity beamformers for a given spatial filter [ref10][capture the acoustic velocity of a directionally-weighted soundfield]
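For the Dolph-Chebyshev pattern in the list above, one construction (our reading of [ref7]) uses the Chebyshev polynomial $T_{2N}(x_0\cos(\theta/2))$: because $\cos^2(\theta/2) = (1+\cos\theta)/2$ and $T_{2N}$ is even, it is a polynomial of degree $N$ in $\cos\theta$ and can be expanded into Legendre polynomials up to order $N$. The Python/NumPy sketch below illustrates this under that assumption and is not the library's implementation; the function name is hypothetical, `N` is the SH order, `R` is the linear main-lobe to side-lobe ratio, and the pattern is normalized to unit gain in the look direction.

```python
import numpy as np
from numpy.polynomial import chebyshev as C
from numpy.polynomial import legendre as L
from numpy.polynomial import polynomial as P


def dolph_chebyshev_legendre(N, R):
    """Legendre coefficients d_0..d_N of f(z) = T_2N(x0 * sqrt((1 + z) / 2)) / R, z = cos(theta),
    with x0 = cosh(arccosh(R) / (2N)): unit gain at theta = 0, side lobes no higher than 1 / R.
    Returns (d, x0)."""
    x0 = np.cosh(np.arccosh(R) / (2 * N))
    t = C.cheb2poly([0] * (2 * N) + [1])           # power-series coefficients of T_2N(x)
    f = np.zeros(N + 1)
    for k in range(N + 1):
        # T_2N is even, and x^(2k) = (x0^2 (1 + z) / 2)^k is a degree-k polynomial in z
        f[: k + 1] += t[2 * k] * P.polypow([x0**2 / 2, x0**2 / 2], k)
    return L.poly2leg(f / R), x0


N, R = 4, 10 ** (25 / 20)                           # order 4, 25 dB side-lobe level (assumed)
d, x0 = dolph_chebyshev_legendre(N, R)
c_shd = 4 * np.pi * d / (2 * np.arange(N + 1) + 1)  # per-order SHD coefficients c_n

# Check the Legendre expansion against the closed form on a grid of z = cos(theta)
z = np.linspace(-1.0, 1.0, 2001)
f_leg = L.legval(z, d)
f_direct = C.chebval(x0 * np.sqrt((1 + z) / 2), [0] * (2 * N) + [1]) / R
```

Here `c_shd` follows the convention pattern $= \sum_n c_n \frac{2n+1}{4\pi} P_n(\cos\theta)$, so $c_n = 4\pi d_n/(2n+1)$, and steering works as for any axisymmetric pattern, with weights $w_{nm} = c_n Y_{nm}(\mathbf{u}_0)$. In the side-lobe region ($x_0\cos(\theta/2) \le 1$) the pattern stays within $\pm 1/R$.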

Signal-Dependent and Parametric Beamforming [refs 11-12]

Contrary to the fixed beamformers of the previous section, parametric and signal-dependent beamformers use information about the signals of interest, given either in terms of acoustical parameters, such as the DoAs of the sources, or extracted from the second-order statistics of the array signals, as given by their spatial covariance matrix (or a combination of the two).

The following examples are included in the library:

- plane-wave decomposition (PWD) beamformer at a desired DoA, with nulls at other specified DoAs

- null-steering beamformer at specified DoAs with a constraint on the omnidirectional response

- minimum-variance distortionless response (MVDR) beamformer in the SHD

- linearly-constrained minimum variance (LCMV) beamformer in the SHD

- informed parametric multi-wave multi-channel Wiener spatial filter (iPMMW) in the SHD [ref12]
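As an illustration of the MVDR entry above, the Python/NumPy sketch below computes $\mathbf{w} = \mathbf{R}^{-1}\mathbf{d}/(\mathbf{d}^H\mathbf{R}^{-1}\mathbf{d})$ in the SHD, taking the steering vector $\mathbf{d}$ as the vector of SH values at the look direction. It assumes first-order real orthonormal SH (ACN), a modeled covariance with one strong interferer plus weak uncorrelated noise, and hypothetical directions and powers; `sh1` and `mvdr_weights` are not functions of the library.

```python
import numpy as np


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def mvdr_weights(R, d):
    """MVDR weights w = R^-1 d / (d^H R^-1 d): unit gain toward d, minimum output power otherwise."""
    Rinv_d = np.linalg.solve(R, d)
    return Rinv_d / (np.conj(d) @ Rinv_d)


d = sh1([0.0, 0.0, 1.0])[0]        # steering vector: SH values at the look direction (+z, hypothetical)
y_int = sh1([1.0, 0.0, 0.0])[0]    # SH values at a hypothetical interferer direction (+x)

# Modeled SH covariance: strong interferer (power 100) plus weak uncorrelated noise (0.01), assumed values
R = 100.0 * np.outer(y_int, y_int) + 0.01 * np.eye(4)

w = mvdr_weights(R, d)
gain_look = np.conj(w) @ d         # output y = w^H a; the distortionless constraint gives 1
gain_int = np.conj(w) @ y_int      # strongly attenuated interferer

# Signal-independent PWD beamformer with unit gain toward the same look direction, for comparison
w_pwd = d / (d @ d)
gain_int_pwd = w_pwd @ y_int
```

With these assumed values the PWD beamformer passes the interferer at 0.25 of its look-direction gain, whereas MVDR keeps unit gain toward the look direction and places a near-null on the interferer.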

Direction-of-Arrival (DoA) Estimation in the SHD [refs 13-17]

Direction-of-arrival (DoA) estimation can be done by a steered-response power approach, steering a beamformer on a grid and checking for peaks in the power output [refs 13-14,16-17], or by a subspace approach such as MUSIC. Another alternative is to utilize the acoustic intensity vector [ref 15], obtained from the first-order signals, whose temporal and spatial statistics reveal information about the presence and distribution of sound sources.
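The steered-response power approach can be sketched by scanning PWD weights over a grid and picking the peak of $\mathbf{w}(\mathbf{u})^H\mathbf{C}\,\mathbf{w}(\mathbf{u})$. This Python/NumPy sketch assumes first-order real orthonormal SH (ACN), a Fibonacci-lattice grid, and a modeled SH covariance of one source plus isotropic diffuse sound; all names and values are hypothetical, and a first-order map has a much broader peak than higher-order ones.

```python
import numpy as np


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def fibonacci_grid(n):
    """Approximately uniform unit vectors on the sphere (Fibonacci lattice)."""
    i = np.arange(n) + 0.5
    z = 1 - 2 * i / n
    phi = np.pi * (1 + np.sqrt(5)) * i
    r = np.sqrt(1 - z**2)
    return np.column_stack([r * np.cos(phi), r * np.sin(phi), z])


def srp_pwd(C, grid):
    """Steered-response power w(u)^H C w(u) with PWD weights w(u) = Y(u) for every grid direction."""
    W = sh1(grid)                                   # (G, 4): one steering vector per row
    return np.real(np.einsum("gi,ij,gj->g", W.conj(), C, W))


azi, ele = np.deg2rad(30.0), np.deg2rad(10.0)       # hypothetical source direction
u_src = np.array([np.cos(ele) * np.cos(azi), np.cos(ele) * np.sin(azi), np.sin(ele)])
y_src = sh1(u_src)[0]
C = 1.0 * np.outer(y_src, y_src) + (0.5 / (4 * np.pi)) * np.eye(4)   # source + diffuse (power 0.5)

grid = fibonacci_grid(5000)
P = srp_pwd(C, grid)
u_est = grid[np.argmax(P)]                          # peak of the power map
err_deg = np.rad2deg(np.arccos(np.clip(u_est @ u_src, -1.0, 1.0)))
```

The diffuse term only adds a constant to the map, so the peak still lands on the grid point nearest the source; the grid density then sets the resolution of the estimate.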

A few examples of DoA estimation in the SHD are included in the library:

- Steered-response power DoA estimation, based on plane-wave decomposition (regular) beamforming [refs 13,17]

- Steered-response power DoA estimation, based on MVDR beamforming [ref 14]

- Acoustic intensity vector DoA estimation [ref 15]

- MUSIC DoA estimation in the SHD [refs 14,16]
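The intensity-based estimator can be sketched from first-order SH signals as the time-averaged pseudo-intensity $\mathrm{Re}\{a_{00}^*\,\mathbf{a}_1\}$. This Python/NumPy sketch assumes real orthonormal SH in ACN order and the encoding $\mathbf{a}(t) = s(t)\,\mathbf{y}(\mathbf{u})$, under which the vector points toward the source (the physical intensity vector points the opposite way). The signals, noise level and source direction are synthetic, and `intensity_doa` is a hypothetical name.

```python
import numpy as np


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def intensity_doa(a):
    """DoA unit vector from the time-averaged pseudo-intensity Re{conj(a_00) * a_1}.
    a: (T, 4) first-order SH signals in ACN order, whose channels 1..3 carry (y, z, x)."""
    i_vec = np.real(np.conj(a[:, :1]) * a[:, 1:]).mean(axis=0)
    i_xyz = i_vec[[2, 0, 1]]                    # reorder (y, z, x) -> (x, y, z)
    return i_xyz / np.linalg.norm(i_xyz)


rng = np.random.default_rng(1)
T = 4000
azi, ele = np.deg2rad(60.0), np.deg2rad(20.0)   # hypothetical source direction
u_src = np.array([np.cos(ele) * np.cos(azi), np.cos(ele) * np.sin(azi), np.sin(ele)])
s = (rng.normal(size=T) + 1j * rng.normal(size=T)) / np.sqrt(2)                # unit-power source
noise = 0.1 * (rng.normal(size=(T, 4)) + 1j * rng.normal(size=(T, 4))) / np.sqrt(2)
a = s[:, None] * sh1(u_src) + noise             # (T, 4) SH signals

u_est = intensity_doa(a)
azi_est = np.rad2deg(np.arctan2(u_est[1], u_est[0]))
ele_est = np.rad2deg(np.arcsin(u_est[2]))
err_deg = np.rad2deg(np.arccos(np.clip(u_est @ u_src, -1.0, 1.0)))
```

Unlike the grid search, this needs no scanning; its accuracy degrades as diffuse sound or multiple simultaneous sources bias the averaged vector.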

Diffuseness and Direct-to-diffuse Ratio (DDR) Estimation [refs 18-22]

Diffuseness is a measure of how closely a sound field approximates ideal diffuse-field conditions, meaning a sound field of plane waves with random amplitudes, incident from random directions, but with constant mean energy density, or equivalently a constant power distribution from all directions (isotropy). There are measures of diffuseness that consider point-to-point quantities; here, however, we focus on measures that consider the directional distribution and relate to the SHD. In the case of a single source of power $P_s$ and an ideal diffuse field of power $P_d$, the diffuseness $\psi$ is directly related to the direct-to-diffuse ratio (DDR) $\Gamma = P_s/P_d$ through the relation $\psi = P_d/(P_s+P_d) = 1/(1+\Gamma)$. In the case of multiple sources of power $P_i$, an ideal diffuseness can be defined as $\psi = P_d/(P_d + \sum_i P_i)$ and the DDR as $\Gamma = \sum_i P_i/P_d$. Diffuseness is useful in a number of tasks: for acoustic analysis, for parametric spatial sound rendering of room impulse responses (e.g. SIRR) or spatial sound recordings (e.g. DirAC), or for constructing filters for diffuse sound suppression.

The following diffuseness measures are implemented:

- intensity-energy density ratio (IE) [ref.18]

- temporal variation of intensity vectors (TV) [ref.19]

- spherical variance of intensity DoAs (SV) [ref.20]

- directional power variance (DPV) [ref.21]

- COMEDIE estimator (CMD) [ref.22]
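The intensity-energy density ratio (IE) estimator can be sketched directly from a first-order SH covariance matrix $\mathbf{C} = E[\mathbf{a}\mathbf{a}^H]$ as $\psi = 1 - \lVert E[\mathrm{Re}\{w^*\mathbf{v}\}]\rVert / E[(|w|^2 + \lVert\mathbf{v}\rVert^2)/2]$. This Python/NumPy sketch assumes real orthonormal SH in ACN order, pressure-like $w = \sqrt{4\pi}\,a_{00}$ and velocity-like $\mathbf{v} = \sqrt{4\pi/3}\,\mathbf{a}_1$ signals, and a modeled covariance $P_s\mathbf{y}\mathbf{y}^T + (P_d/4\pi)\mathbf{I}$ for one plane wave plus an ideal diffuse field, in place of the time averages used on real signals; the function names are hypothetical. Under that model the estimate reproduces $\psi = 1/(1+\Gamma)$ exactly.

```python
import numpy as np


def sh1(U):
    """Real first-order SH in ACN order (Y00, Y1-1, Y10, Y11), orthonormal over the sphere."""
    U = np.atleast_2d(np.asarray(U, dtype=float))
    c0, c1 = 1 / np.sqrt(4 * np.pi), np.sqrt(3 / (4 * np.pi))
    return np.column_stack([np.full(len(U), c0), c1 * U[:, 1], c1 * U[:, 2], c1 * U[:, 0]])


def model_covariance(P_s, P_d, u_src):
    """First-order SH covariance of one plane wave (power P_s) plus an ideal diffuse field (power P_d)."""
    y = sh1(u_src)[0]
    return P_s * np.outer(y, y) + (P_d / (4 * np.pi)) * np.eye(4)


def ie_diffuseness(C):
    """IE diffuseness 1 - |<intensity>| / <energy>, with w and v scaled so one plane wave gives psi = 0."""
    i_vec = (4 * np.pi / np.sqrt(3)) * np.real(C[0, 1:])              # E[Re{conj(w) v}]
    energy = 0.5 * (4 * np.pi * np.real(C[0, 0])                      # E[|w|^2] / 2
                    + (4 * np.pi / 3) * np.real(np.trace(C[1:, 1:]))) # + E[|v|^2] / 2
    return 1.0 - np.linalg.norm(i_vec) / energy


def ddr_from_diffuseness(psi):
    """Invert psi = 1 / (1 + Gamma)."""
    return (1.0 - psi) / psi


u = np.array([0.0, 1.0, 0.0])                                  # hypothetical source direction
psi_equal = ie_diffuseness(model_covariance(1.0, 1.0, u))      # Gamma = 1
psi_gamma3 = ie_diffuseness(model_covariance(3.0, 1.0, u))     # Gamma = 3
```

The isotropic diffuse term contributes energy but no net intensity, which is why the ratio of the two maps directly onto $P_s/(P_s+P_d)$.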

Diffuse-field Coherence of Directional Sensors/beamformers [refs 8,23]

The diffuse-field coherence (DFC) matrix, under isotropic diffuse conditions, is a fundamental quantity in acoustical array processing, since it approximately models the second-order statistics of late reverberant sound between array signals, and it is useful in a wide variety of beamforming and dereverberation tasks. The DFC matrix expresses the PSD matrix between the array sensors, or beamformers, for a diffuse sound field, normalized by the diffuse sound power, and it depends only on the properties of the microphones: their directionality, orientation, and position in space.

Analytic expressions for the DFC exist only for omnidirectional sensors at arbitrary positions, given by the well-known sinc function of the wavenumber-distance product, and for first-order directional microphones with arbitrary orientations, see e.g. [ref23]. For more general directivities, [ref.8] shows that the DFC can be pre-computed through the expansion of the microphone/beamformer patterns into SHD coefficients.
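Two simple cases can be sketched in Python/NumPy: the sinc model for spaced omnidirectional sensors, and, for coincident directional sensors, the SHD route, in which the diffuse-field cross-PSD is proportional to the inner product of the sensor patterns over the sphere, reducing to a dot product of their SH coefficients. The assumptions (real orthonormal SH in ACN order, coincident sensors only, speed of sound 343 m/s, hypothetical function names) are ours; spaced directional sensors need the more general treatment of [ref.8].

```python
import numpy as np


def dfc_omni(positions, k):
    """DFC matrix of omnidirectional sensors: sin(k d_ij) / (k d_ij), equal to 1 at d_ij = 0."""
    P = np.asarray(positions, dtype=float)
    d = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)
    return np.sinc(k * d / np.pi)            # numpy's sinc(x) is sin(pi x) / (pi x)


def dfc_coincident(B):
    """DFC matrix of coincident directional sensors from real SH pattern coefficients B, shape (Q, (N+1)^2).
    SH orthonormality turns the pattern inner products over the sphere into B @ B.T."""
    G = B @ B.T
    g = np.sqrt(np.diag(G))
    return G / np.outer(g, g)


def cardioid_sh1(u0):
    """Real first-order SH coefficients (ACN, orthonormal) of the cardioid (1 + cos(gamma)) / 2 facing u0."""
    u0 = np.asarray(u0, dtype=float)
    return np.array([0.5 * np.sqrt(4 * np.pi), *(0.5 * np.sqrt(4 * np.pi / 3) * u0[[1, 2, 0]])])


k = 2 * np.pi * 1000.0 / 343.0               # wavenumber at 1 kHz (assumed c = 343 m/s)
G_omni = dfc_omni([[0, 0, 0], [0.1, 0, 0]], k)   # two omnis 10 cm apart

# Coincident cardioids facing +x, -x and +y
B = np.array([cardioid_sh1([1, 0, 0]), cardioid_sh1([-1, 0, 0]), cardioid_sh1([0, 1, 0])])
G_card = dfc_coincident(B)
```

For example, two coincident first-order cardioids facing opposite directions come out with a diffuse-field coherence of 0.5, and two at 90° with 0.75.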

References

References mentioned in the code and the examples:

Moreau, S., Daniel, J. and Bertet, S., 2006. 3D sound field recording with higher order ambisonics-objective measurements and validation of spherical microphone. In Audio Engineering Society Convention 120.

Mh Acoustics Eigenmike, https://mhacoustics.com/products#eigenmike1

Bernschütz, B., Pörschmann, C., Spors, S. and Weinzierl, S., 2011. Soft-limiting der modalen amplitudenverstärkung bei sphärischen mikrofonarrays im plane wave decomposition verfahren. Proceedings of the 37. Deutsche Jahrestagung für Akustik (DAGA 2011).

Jin, C.T., Epain, N. and Parthy, A., 2014. Design, optimization and evaluation of a dual-radius spherical microphone array. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 22(1), pp.193-204.

Elko, G.W., 2004. Differential microphone arrays. In Audio signal processing for next-generation multimedia communication systems (pp. 11-65). Springer.

Zotter, F., Pomberger, H. and Noisternig, M., 2012. Energy-preserving ambisonic decoding. Acta Acustica united with Acustica, 98(1), pp.37-47.

Koretz, A. and Rafaely, B., 2009. Dolph-Chebyshev beampattern design for spherical arrays. IEEE Transactions on Signal Processing, 57(6), pp.2417-2420.

Politis, A., 2016. Diffuse-field coherence of sensors with arbitrary directional responses. arXiv preprint arXiv:1608.07713.

Hafizovic, I., Nilsen, C.I.C. and Holm, S., 2012. Transformation between uniform linear and spherical microphone arrays with symmetric responses. IEEE Transactions on Audio, Speech, and Language Processing, 20(4), pp.1189-1195.

Politis, A. and Pulkki, V., 2016. Acoustic intensity, energy-density and diffuseness estimation in a directionally-constrained region. arXiv preprint arXiv:1609.03409.

Thiergart, O. and Habets, E.A., 2014. Extracting reverberant sound using a linearly constrained minimum variance spatial filter. IEEE Signal Processing Letters, 21(5), pp.630-634.

Thiergart, O., Taseska, M. and Habets, E.A., 2014. An informed parametric spatial filter based on instantaneous direction-of-arrival estimates. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 22(12), pp.2182-2196.

Park, M. and Rafaely, B., 2005. Sound-field analysis by plane-wave decomposition using spherical microphone array. The Journal of the Acoustical Society of America, 118(5), pp.3094-3103.

Khaykin, D. and Rafaely, B., 2012. Acoustic analysis by spherical microphone array processing of room impulse responses. The Journal of the Acoustical Society of America, 132(1), pp.261-270.

Tervo, S., 2009. Direction estimation based on sound intensity vectors. In 17th European Signal Processing Conference (EUSIPCO 2009).

Tervo, S. and Politis, A., 2015. Direction of arrival estimation of reflections from room impulse responses using a spherical microphone array. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 23(10), pp.1539-1551.

Delikaris-Manias, D., Pavlidi, S., Pulkki, V. and Mouchtaris, A., 2016. 3D localization of multiple audio sources utilizing 2D DOA histograms. In European Signal Processing Conference (EUSIPCO 2016).

Merimaa, J. and Pulkki, V., 2005. Spatial impulse response rendering I: Analysis and synthesis. Journal of the Audio Engineering Society, 53(12), pp.1115-1127.

Ahonen, J. and Pulkki, V., 2009. Diffuseness estimation using temporal variation of intensity vectors. In 2009 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA).

Politis, A., Delikaris-Manias, S. and Pulkki, V., 2015. Direction-of-arrival and diffuseness estimation above spatial aliasing for symmetrical directional microphone arrays. In 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP).

Gover, B.N., Ryan, J.G. and Stinson, M.R., 2002. Microphone array measurement system for analysis of directional and spatial variations of sound fields. The Journal of the Acoustical Society of America, 112(5), pp.1980-1991.

Epain, N. and Jin, C.T., 2016. Spherical Harmonic Signal Covariance and Sound Field Diffuseness. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 24(10), pp.1796-1807.

Elko, G.W., 2001. Spatial coherence functions for differential microphones in isotropic noise fields. In Microphone Arrays (pp. 61-85). Springer Berlin Heidelberg.